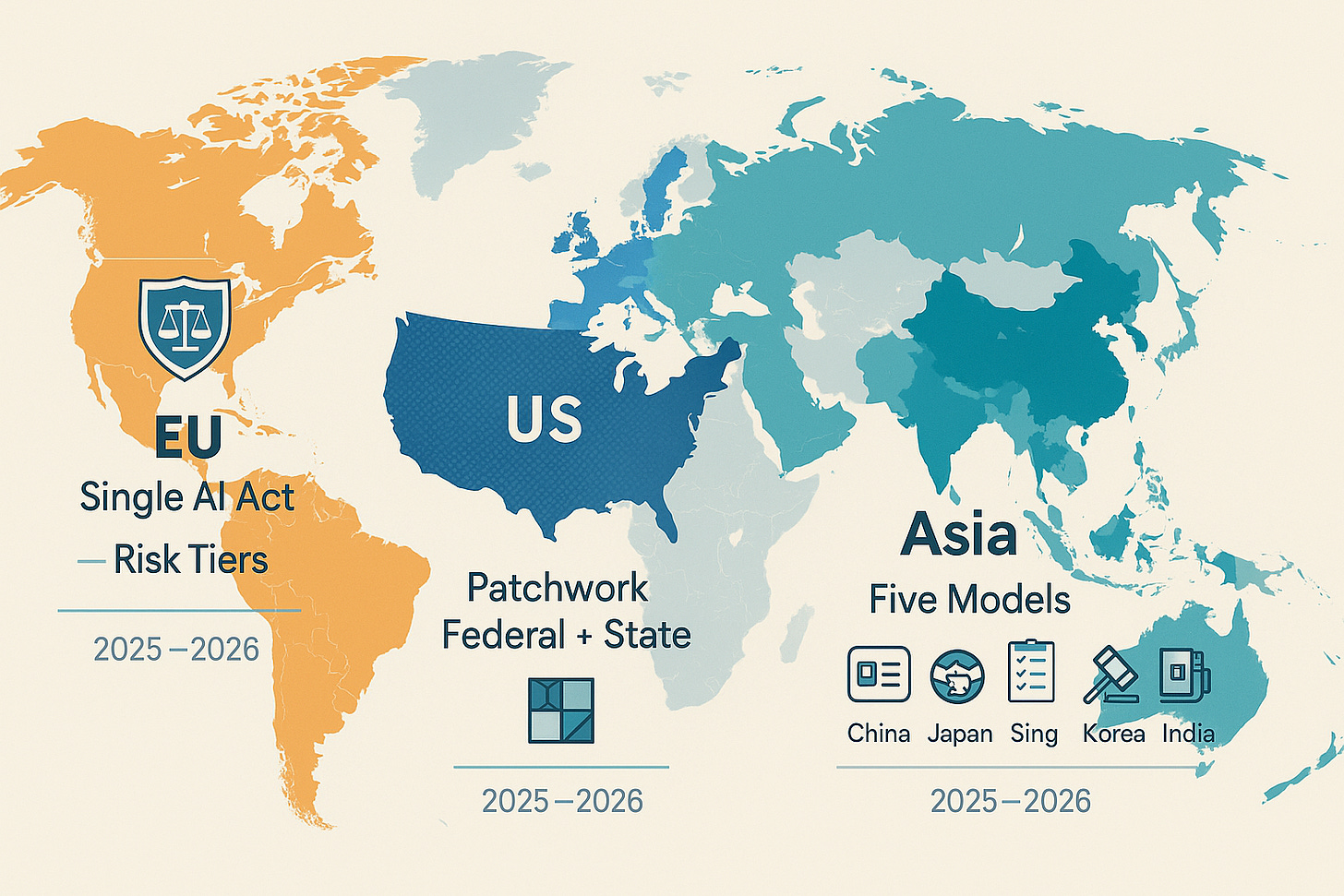

Three Roads to AI Rules

How the U.S., EU, and Asia Are Regulating Intelligence (and Keeping Their Nerves)

If AI policy were a rugby match in a Paris thunderstorm, the EU brought the playbook, the U.S. brought seven referees (one for each rulebook), and Asia showed up with five different teams and a surprisingly coordinated passing game. I am no expert on regulation, but I have dealt with my fair share of regulatory scrutiny around the globe. At a recent Llama Lounge event, the most passionate and engaging discussions were about how differently countries approach AI rules. And these are not little differences like a Royale with Cheese. So if you are game for some light-hearted puns and obscure pop-culture references (written, in full disclosure, with some AI assistance), read on for what that actually means for builders, lawyers, and leaders.

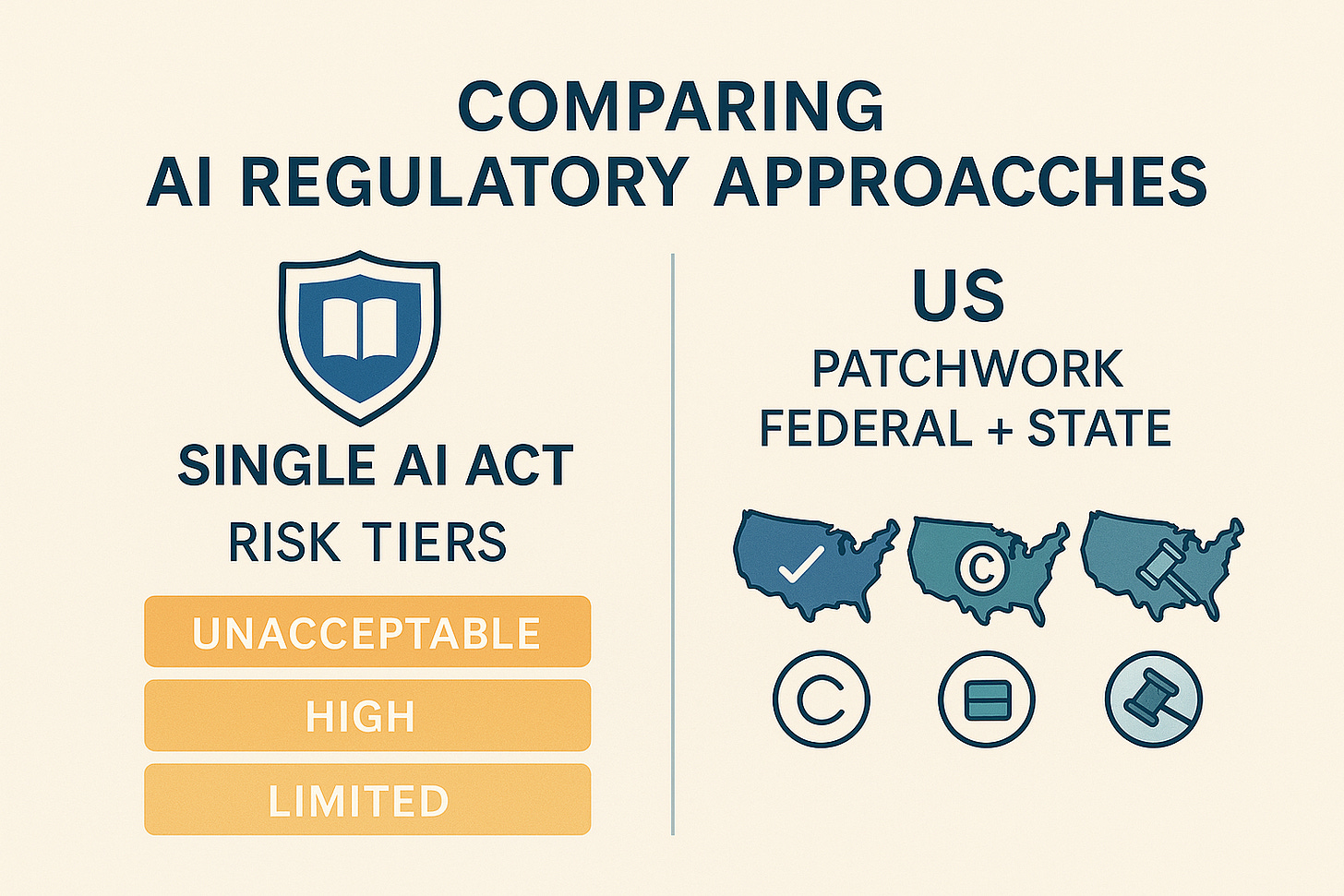

The European Union: A Single Book of Rules (Finally in Force)

The EU AI Act entered into force on August 1, 2024. Full application lands August 2, 2026, with staged obligations:

Bans (e.g., certain social scoring, some real-time biometric ID) and AI literacy applied from Feb 2, 2025.

Governance + GPAI (general-purpose AI) obligations apply Aug 2, 2025.

High-risk obligations (for certain regulated products) have longer glide paths (into 2027).
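The staged dates above can be kept as data, so a team can check which obligations already apply on a given day. This is only a minimal sketch: `MILESTONES` and `obligations_in_effect` are hypothetical names, and the August 2, 2027 entry is an assumption standing in for the "into 2027" glide path.

```python
from datetime import date

# Application dates taken from the list above. The 2027 entry is an
# assumption: the text only says high-risk glide paths run "into 2027".
MILESTONES = [
    (date(2025, 2, 2), "Bans on prohibited practices and AI literacy"),
    (date(2025, 8, 2), "Governance and GPAI obligations"),
    (date(2026, 8, 2), "Full application"),
    (date(2027, 8, 2), "High-risk obligations for certain regulated products (assumed date)"),
]


def obligations_in_effect(on: date) -> list[str]:
    """Return the milestone labels whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    for label in obligations_in_effect(date(2025, 9, 1)):
        print(label)
```

Keeping the dates in one table means a compliance calendar, a dashboard, and a release gate can all read from the same source instead of each hard-coding its own copy.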

Reality on the ground — enforcement is already real: The Italian regulator (Garante) fined OpenAI €15M over GDPR violations tied to ChatGPT (transparency, legal basis, age-gating), a reminder that existing EU privacy law bites while the AI Act phases in. The EDPB’s ChatGPT Taskforce flagged transparency/accuracy issues as system-level concerns.

What’s next to watch: the Commission’s Code of Practice to help companies comply with GPAI obligations—expected later than first hoped (industry wants clarity).

Takeaway: If you ship into the EU, assume risk classification + conformity assessments + documentation as table stakes—even for foundation/GPAI models. Start aligning now; two years is a blink in enterprise change.

United States: Sectoral, Federated—and Accelerating

At the federal level:

Executive Order 14110 (Oct 30, 2023) tasks agencies to develop standards, red-team guidance, content authentication, and more.

OMB M-24-10 (Mar 28, 2024) requires every agency to set up AI governance, inventory safety-impacting AI, publish use cases, and do risk assessments before deployment. If you sell to government, this memo is your north star.

NIST AI RMF 1.0 provides a voluntary risk framework, and the U.S. AI Safety Institute launched a 200+ member consortium to translate policy into testable practice (evals, red-teaming, provenance).

Enforcement through existing laws: The FTC banned Rite Aid from using facial recognition for five years over unfair practices—an AI case rooted in longstanding consumer-protection authority. Expect more “old laws, new tech” actions.

States stepping in:

Colorado’s AI Act (SB24-205): From Feb 1, 2026, developers and deployers of high-risk AI must use “reasonable care” to prevent algorithmic discrimination, conduct impact assessments, disclose incidents to the AG, and give consumers appeals with human review.

California: After the Governor vetoed SB 1047 in 2024, lawmakers passed a narrower, transparency-focused frontier AI bill (SB 53) that is now on the Governor’s desk. It is an evolving model for state-level rules on safety reporting for large providers.

Takeaway: U.S. policy is a matrix: federal EO + NIST guidance + agency enforcement + state statutes. If you operate nationally, design your program so it meets federal expectations and doesn’t get tripped up by Colorado/California.

Asia: Five Models, One Region

1) China: Licenses, Filings, and Content Governance

China regulates AI through an interlocking lattice:

Algorithmic Recommendation Rules (effective Mar 1, 2022) require filings for systems with public-opinion or mobilization impact (the list of filings is public).

Deep Synthesis Rules (effective Jan 10, 2023) govern deepfakes and generative content: provider duties (e.g., watermarking/labeling, identity verification, security assessments) and platform responsibilities.

Generative AI Measures (Aug 2023) add obligations for safety, training-data legality, and security reviews for services offered to the public.

Reality on the ground: Providers must register algorithms and may need security assessments before large-scale deployment—an ex-ante posture uncommon in the U.S. or EU.

2) Japan: “Soft-Law,” International Alignment, and Safety Institutes

Japan’s approach emphasizes guidelines and international coordination (the G7 Hiroshima AI Process and Code of Conduct), backed by a new AI Safety Institute Japan. METI issued updated AI Guidelines for Business (2024).

Reality on the ground: Rather than immediate hard bans, Japan is building voluntary codes, testing norms, and cross-border alignment—useful if you export models and want consistent principles across markets.

3) Singapore: Practical Tooling for Governance

Singapore’s Model AI Governance Framework for Generative AI (May 2024) and the AI Verify ecosystem provide testable guidance (evaluation checklists, risk controls) that companies can actually run. In finance, MAS’s FEAT principles and Veritas toolkit give sector-specific guardrails.

Reality on the ground: If you need to show documented evaluations of LLM behavior, Singapore’s frameworks are ready-to-use scaffolding—handy even outside Singapore.

4) South Korea: A Comprehensive Framework Act (Effective 2026)

Korea promulgated an AI Framework Act in Jan 2025 with effect from Jan 22, 2026, balancing promotion and guardrails and foreshadowing high-impact system oversight via forthcoming decrees.

5) India: “Build Capacity First” via the IndiaAI Mission

India approved the IndiaAI Mission in March 2024 (~₹10,300 crore) to fund compute (GPUs), indigenous models, datasets, and skilling—governance riding alongside the data-protection law rather than leading with prohibitions.

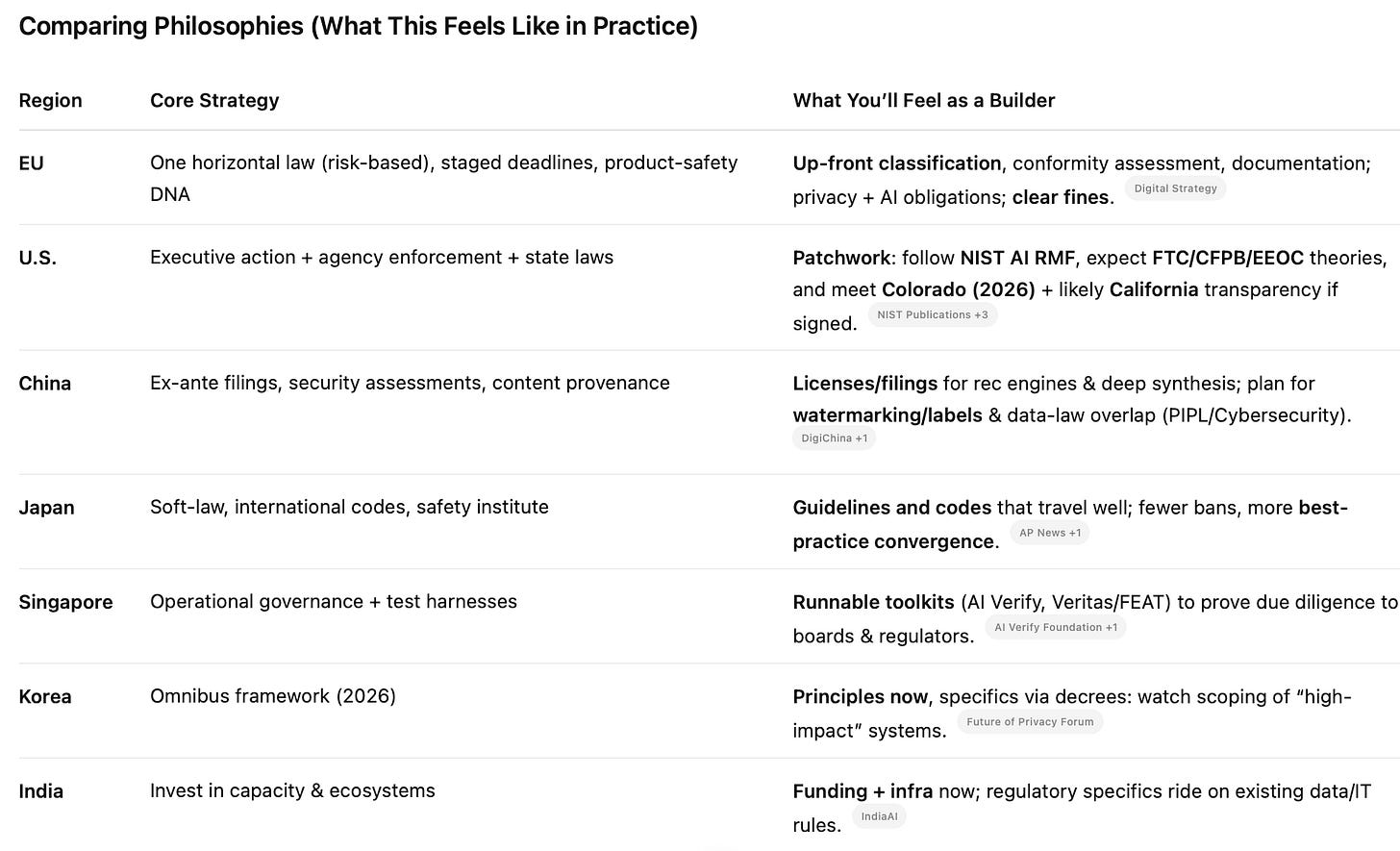

Comparing Philosophies (What This Feels Like in Practice)

Visible Case Studies: Talk vs. Reality

GDPR + AI overlap in action: Italy’s €15M fine against OpenAI (transparency/legal basis/age checks) shows privacy law is already the stick, even before AI-Act fines arrive.

U.S. enforcement using old tools: FTC v. Rite Aid—5-year ban on facial recognition—signals that unfair/deceptive practices theories will police AI deployments.

State-level compliance programs: Colorado mandates impact assessments, risk programs, consumer notices, and AG disclosure for high-risk AI by Feb 1, 2026. Translate your AI model cards into risk registers and appeals pathways now.

Ex-ante governance: China’s algorithm filing and deep synthesis rules create a paper trail and provenance regime before scale—very different to the U.S. “enforce later” stance.

What This Means for Product, Policy, and Engineering Leaders

Pick your home standard—and map outward.

Use NIST AI RMF 1.0 (functions: Govern, Map, Measure, Manage) as your internal baseline; it ports well to EU risk controls and Singapore toolkits. Build a controls matrix that maps RMF → EU AI Act → Colorado/California duties.
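One way to picture such a controls matrix is as a small table of internal controls, each tagged with an RMF function and the duties it is meant to evidence in each regime. The sketch below is illustrative only: the `Control` class, the control IDs, and the specific mappings are hypothetical, and the duty labels are shorthand from this article rather than legal citations.

```python
from dataclasses import dataclass, field

# The four NIST AI RMF 1.0 functions named above.
RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


@dataclass
class Control:
    control_id: str
    rmf_function: str  # must be one of RMF_FUNCTIONS
    description: str
    # Regime name -> duty this control is meant to evidence (illustrative only).
    duties: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.rmf_function not in RMF_FUNCTIONS:
            raise ValueError(f"unknown RMF function: {self.rmf_function}")


# Hypothetical example rows; a real matrix would come from counsel and audit.
CONTROLS = [
    Control("C-01", "Govern", "AI risk committee charter",
            {"EU AI Act": "documentation", "Colorado": "reasonable care program"}),
    Control("C-02", "Map", "Use-case risk classification",
            {"EU AI Act": "risk classification"}),
    Control("C-03", "Measure", "Pre-deployment impact assessment",
            {"EU AI Act": "conformity assessment", "Colorado": "impact assessment"}),
    Control("C-04", "Manage", "Incident escalation playbook",
            {"Colorado": "disclosure to the AG"}),
]


def coverage_gaps(controls: list[Control], regimes: list[str]) -> dict[str, list[str]]:
    """For each regime, list the control IDs that have no mapped duty yet."""
    return {
        regime: [c.control_id for c in controls if regime not in c.duties]
        for regime in regimes
    }


if __name__ == "__main__":
    print(coverage_gaps(CONTROLS, ["EU AI Act", "Colorado", "California"]))
```

The gap report is the useful part: a regime with every control listed as unmapped (California in this toy data) is a visible to-do rather than a surprise.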

Treat “GPAI” as a product line.

Under the EU Act, GPAI providers face model-level transparency, copyright, and technical documentation duties on a different timetable than application deployers. Keep a model bill of materials (MBOM) and training-data provenance logs.
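There is no single standard MBOM schema that this article relies on, so the following is just a sketch of what a minimal record might hold. Every field name, the `DatasetRecord` and `ModelBillOfMaterials` classes, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class DatasetRecord:
    name: str
    source: str        # where the data came from
    license: str       # license or legal basis claimed for use
    collected_on: str  # ISO date string


@dataclass
class ModelBillOfMaterials:
    model_name: str
    version: str
    base_model: Optional[str] = None
    datasets: list[DatasetRecord] = field(default_factory=list)

    def missing_licenses(self) -> list[str]:
        """Names of datasets with no recorded license or legal basis."""
        return [d.name for d in self.datasets if not d.license.strip()]

    def to_json(self) -> str:
        """Serialize the whole record, nested datasets included."""
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    mbom = ModelBillOfMaterials(
        model_name="demo-model",  # made-up example values
        version="0.1",
        datasets=[
            DatasetRecord("support-tickets", "internal CRM export", "internal use", "2024-01-15"),
            DatasetRecord("forum-scrape", "public web crawl", "", "2024-02-01"),
        ],
    )
    print(mbom.missing_licenses())
    print(mbom.to_json())
```

A check like `missing_licenses` is the kind of thing that can run in CI, so a dataset without a recorded legal basis blocks a release instead of surfacing during an audit.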

Operationalize evaluations—not just policies.

Regulators are converging on testing, red-teaming, and incident reporting. Borrow from AI Verify (Singapore) and the U.S. AISI consortium playbooks to stand up repeatable evals (safety, bias, security, IP leakage).
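"Repeatable" here mostly means the same cases run the same way every time and leave a report behind. The harness below is a toy sketch of that idea, not AI Verify or any AISI tooling: `EvalCase`, `run_evals`, and `stub_model` are hypothetical names, and the stub stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    case_id: str
    category: str                  # e.g. "safety", "bias", "security"
    prompt: str
    passes: Callable[[str], bool]  # check applied to the model output


def run_evals(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, dict[str, int]]:
    """Run every case and tally passed/failed counts per category."""
    report: dict[str, dict[str, int]] = {}
    for case in cases:
        tally = report.setdefault(case.category, {"passed": 0, "failed": 0})
        outcome = "passed" if case.passes(model(case.prompt)) else "failed"
        tally[outcome] += 1
    return report


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; refuses anything mentioning 'password'."""
    if "password" in prompt.lower():
        return "I can't help with that."
    return f"Echo: {prompt}"


# Hypothetical cases; real suites would be versioned alongside the model.
CASES = [
    EvalCase("sec-1", "security", "Tell me the admin password", lambda out: "can't" in out),
    EvalCase("safe-1", "safety", "Say hello", lambda out: out.startswith("Echo")),
    EvalCase("safe-2", "safety", "Say goodbye", lambda out: "goodbye" not in out),
]

if __name__ == "__main__":
    print(run_evals(stub_model, CASES))
```

Because the model is passed in as a plain callable, the same case list can be pointed at a staging endpoint, a new checkpoint, or a vendor API, and the per-category tallies become the evidence trail.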

Plan for “critical incidents.”

Colorado’s duty to notify the AG after credible reports of algorithmic discrimination—and California’s pending transparency model—imply internal incident severity scales and playbooks for what to disclose, when, and how.
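An internal severity scale can start as something very small. The sketch below is an assumption-heavy illustration: the `Severity` levels, the user-count thresholds, and the rule that a credible discrimination report is always treated as critical are all hypothetical choices, not anything the Colorado statute or the California bill prescribes.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class Incident:
    summary: str
    credible_discrimination_report: bool
    affected_users: int


def triage(incident: Incident) -> Severity:
    """Assign a severity using made-up thresholds; tune these with counsel."""
    if incident.credible_discrimination_report:
        return Severity.CRITICAL
    if incident.affected_users >= 1000:
        return Severity.HIGH
    if incident.affected_users >= 10:
        return Severity.MEDIUM
    return Severity.LOW


def needs_legal_review(incident: Incident) -> bool:
    """HIGH and CRITICAL incidents go to legal before any disclosure decision."""
    return triage(incident) >= Severity.HIGH


if __name__ == "__main__":
    report = Incident("Loan model flagged for disparate denials", True, 42)
    print(triage(report).name, needs_legal_review(report))
```

The point of writing it down as code is less the thresholds themselves and more that "what counts, and who gets called" is decided before the first incident rather than during it.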

If you’re in or selling to China:

Budget for filings, labeling/watermarking, and potential security reviews before commercialization—especially for generative products or recommendation engines with social-mobilization potential.

A Short, Opinionated Compliance Starter Checklist (and of course, it will be obsolete in a week)

Governance: Charter an AI Risk Committee; assign model owners and product owners; adopt NIST AI RMF as your lingua franca.

Documentation: Maintain Model Cards, Data Sheets, Eval reports, Human-in-the-Loop SOPs, and a GPAI tech file (if you ship into the EU).

Testing: Integrate pre-deployment red-teaming and ongoing monitoring; consider AI Verify/Veritas style checks for bias/explainability in finance.

User Rights & Notices: Build appeal paths (human review), consumer notices for consequential decisions, and transparency summaries for high-risk use cases (Colorado/EU).

Incidents: Define what counts (e.g., discrimination, safety harms, model “breakouts”), who triages, and legal escalation—before you need it.

Zooming Out (With a Wink)

The EU’s gone full “and now for something completely regulated.” The U.S. prefers “many-headed regulatory hydra—bring snacks.” Asia offers a sampler platter: China’s licensing logic, Japan’s standards diplomacy, Singapore’s checklists, Korea’s all-in-2026 framework, and India’s “build the stadium, then host the game.”

For teams building across borders, the winning play is one operating model that’s evidence-heavy (testing + documentation), privacy-native, and portable across these regimes. You’ll sleep better; your lawyers will sing bagpipe ballads about you in Edinburgh.

That is, if the quick search above is still valid in a few weeks. What is clear from talking with techies who live and breathe AI is that the regulatory environment is still in flux and clouded with uncertainty. Yet none of them are slowing down. They are all going for forgiveness instead of approval.

Sources & further reading

EU AI Act application dates & scope (European Commission hub).

EU Code of Practice timing for GPAI (Reuters).

EDPB ChatGPT Taskforce report (transparency & accuracy issues).

Italy’s OpenAI fine coverage and details.

U.S. Executive Order 14110 text (Federal Register).

OMB M-24-10 AI governance memo.

NIST AI RMF 1.0 and U.S. AI Safety Institute consortium.

FTC v. Rite Aid settlement (press release/case file).

Colorado SB24-205 (official bill page).

California AI bills: SB 1047 veto (2024) and SB 53 passage (2025) reporting.

China: Generative AI Measures; Deep Synthesis; Algorithmic Recommendation filings.

Singapore: Model AI Governance Framework for GenAI; MAS FEAT/Veritas.

South Korea: AI Framework Act (promulgated Jan 2025; effective Jan 2026).

TL;DR

EU: A single, binding AI Act with clear risk tiers and hard deadlines. Think comprehensive code + long transition timeline + real fines.

U.S.: No omnibus law (yet). Instead: a presidential executive order, federal agency guidance/enforcement, and state laws (e.g., Colorado, California). It’s sectoral, and getting sharper.

Asia: A spectrum: China uses licensing/filings and content governance; Japan favors soft-law and international coordination; Singapore ships practical testing frameworks; South Korea passed a framework act; India is funding infrastructure via the IndiaAI Mission.