Recently, I attended a meet-up of people in the field of AI and ID. It was interesting to see the progress of what is being created (wallets and tokens, anyone?), but I had a hard time explaining what I do, or why I was there besides basic curiosity. For me, AI is a new area that I have been trying to master over the past 10 months. I have mostly been educating others by oversimplifying complex concepts and/or helping entrepreneurs in the AI space with introductions and potential angel funding. But, as an aside, I have been joking with acquaintances that I was working on everything but generative AI.

So I bluntly said, “My interest is in degenerative AI.” A few of the attendees thought it was funny, and even the greatest approach ever, but I had given them some context before dropping that line.

Let me share some of the discussion points before going into various views on the topic.

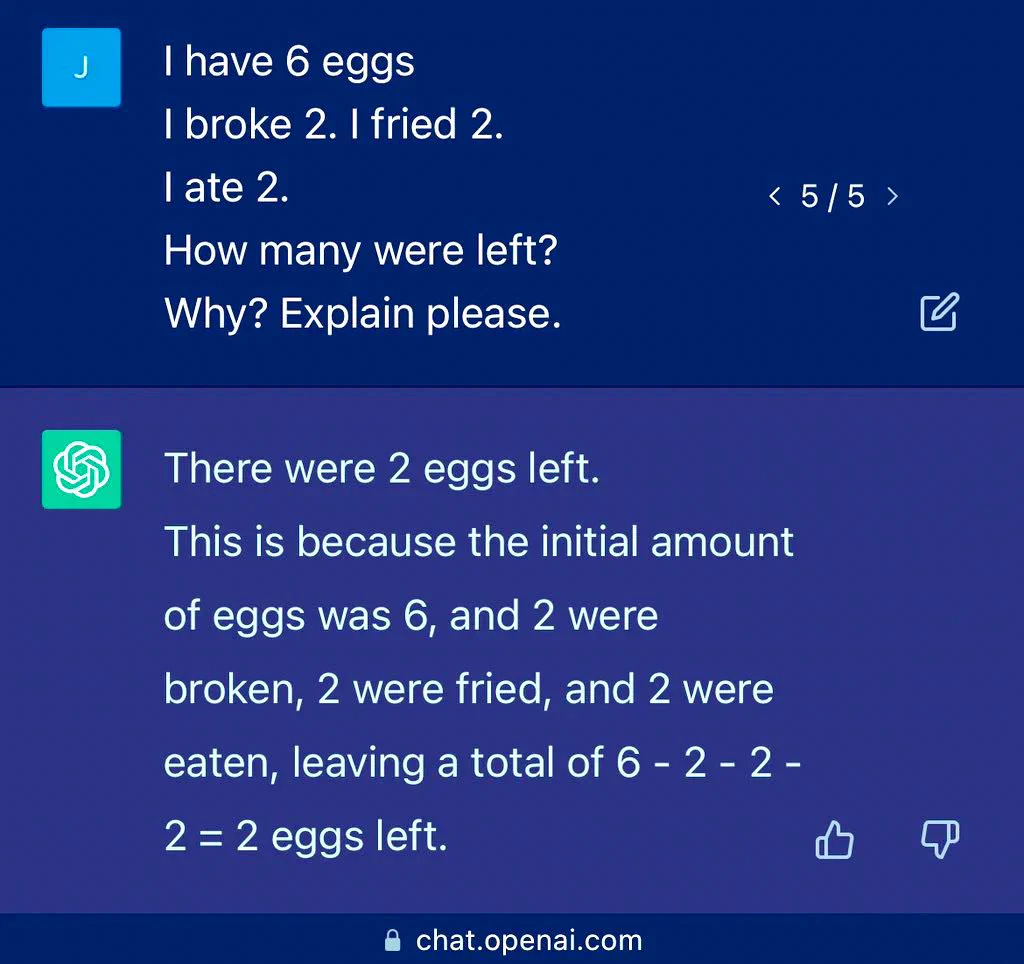

Interestingly, there is no established concept of degenerative AI out there. Ask ChatGPT and you will get nothing but the speculative definition below, derived by splitting the term into its two words.

“If we break down the term, "degenerative" generally refers to something that is deteriorating or declining over time. In the context of AI, it could hypothetically refer to an AI system that degrades in performance over time, perhaps due to factors like outdated training data, changes in the problem space or environment, or lack of maintenance and updates.”

There was a recent reference to “degenerative AI” in Cosmos Magazine but, heck, I have been using that joke since September 2022, so I’ll claim credit for coining it.

That article, however, looks at degenerative AI very differently: mostly as the saturation of a system, resulting in its collapse. For more details, check the article.

Also, is degenerative AI the true opposite of generative AI? Technically, no. The opposite of generative modeling is discriminative modeling: a discriminative model predicts a category given a set of features, while a generative model does the reverse, predicting and generating features given a certain category.
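To make that distinction concrete, here is a minimal Python sketch on a hypothetical 1-D toy dataset (the data and function names are illustrative assumptions). The generative model learns what the features of each category look like, one Gaussian per category, so it can classify and also produce brand-new features for a chosen category. The discriminative model, a plain logistic regression, only learns the boundary between categories.

```python
import math
import random

# Hypothetical toy 1-D dataset of (feature, category) pairs.
DATA = [(-2.5, 0), (-2.0, 0), (-1.5, 0), (-1.0, 0),
        (1.0, 1), (1.5, 1), (2.0, 1), (2.5, 1)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Generative: learn p(x | y) as one Gaussian per category, plus the prior p(y).
def fit_generative(data):
    params = {}
    for label in sorted({y for _, y in data}):
        xs = [x for x, y in data if y == label]
        mean = sum(xs) / len(xs)
        var = sum((x - mean) ** 2 for x in xs) / len(xs)
        params[label] = (mean, var, len(xs) / len(data))
    return params


def generate_features(params, label, n, rng):
    # Given a category, sample new features: the "generative" direction.
    mean, var, _ = params[label]
    return [rng.gauss(mean, math.sqrt(var)) for _ in range(n)]


def predict_generative(params, x):
    # Bayes' rule: pick the y maximizing log p(x | y) + log p(y).
    def score(label):
        mean, var, prior = params[label]
        return (-((x - mean) ** 2) / (2 * var)
                - 0.5 * math.log(2 * math.pi * var) + math.log(prior))
    return max(params, key=score)


# Discriminative: logistic regression learns p(y | x) directly, via SGD.
def fit_discriminative(data, lr=0.1, epochs=500):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w * x + b)
            w -= lr * (p - y) * x
            b -= lr * (p - y)
    return w, b


def predict_discriminative(model, x):
    w, b = model
    return 1 if w * x + b > 0 else 0
```

Only the generative model can answer “what would a new example of this category look like?”; the discriminative model can only answer “which category does this example belong to?”.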

Well, that’s great, but what do I mean by degenerative AI? Another great question, thank you for asking.

In my mind, degenerative AI is using AI against AI. The way I explain it is that AI will generate hallucinations once in a while. We, as humans, know that people do not have 3 eyes, 5 legs, 40 fingers, or a nose below the mouth. We also know what a correctly bent limb looks like. AI does not know that, and we have all seen badly generated AI images with very recognizable errors.

Now, as a human, I can tell the AI model that what it generated was bad and therefore not an acceptable result. Then I train the model on its mistakes, so it learns from its hallucinations through human input: a human should have 2 eyes above the nose; the mouth is on the face, below the nose; we have 2 arms on the upper body with 5 fingers on each hand, and 2 legs with 5 toes on each foot on the lower body. Everything else, like what was generated before, is therefore considered incorrect.

Let’s then unleash that newly acquired knowledge against image databases, video content, transaction logs or, why not, an infrastructure network, and instruct the AI engine to go and find other “hallucinations” in them.

Technically, I am using AI to detect anomalies by learning from its own errors, and to report them. Once the AI picks up and isolates the anomalies, a human can enter the picture and review them.
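The loop described above (learn what “correct” looks like from human-approved outputs, scan new data for deviations, hand the deviations to a human) can be sketched as a simple statistical anomaly detector. This is a minimal illustration, assuming images have already been reduced to numeric features by some upstream model; the feature names, the z-score rule, and the threshold are all hypothetical choices, not the approach discussed at the meet-up.

```python
import statistics

# Hypothetical features extracted from generated images by some upstream model.
# A human reviewer approved these examples as "correct".
APPROVED = [
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.91},
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.88},
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.93},
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.90},
]

# New, unreviewed items to scan for "hallucinations".
NEW_ITEMS = [
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.90},  # looks normal
    {"eyes": 3, "noses": 1, "fingers": 40, "symmetry": 0.89},  # extra eyes/fingers
    {"eyes": 2, "noses": 1, "fingers": 10, "symmetry": 0.60},  # distorted face
]


def learn_profile(approved):
    """Learn what "normal" looks like: mean and spread of each feature."""
    profile = {}
    for key in approved[0]:
        values = [item[key] for item in approved]
        profile[key] = (statistics.mean(values), statistics.pstdev(values))
    return profile


def anomaly_reasons(profile, item, z_threshold=3.0):
    """Return readable reasons why an item deviates from the learned profile."""
    reasons = []
    for key, (mean, std) in profile.items():
        value = item.get(key)
        if value is None:
            reasons.append(f"{key}: missing")
        elif std == 0:
            # Every approved example agreed exactly, so any difference is suspect.
            if value != mean:
                reasons.append(f"{key}={value}, expected {mean}")
        elif abs(value - mean) / std > z_threshold:
            reasons.append(f"{key}={value}, expected about {mean:.2f}")
    return reasons


def flag_for_review(profile, items, z_threshold=3.0):
    """Queue (index, reasons) for every item a human should look at."""
    queue = []
    for index, item in enumerate(items):
        reasons = anomaly_reasons(profile, item, z_threshold)
        if reasons:
            queue.append((index, reasons))
    return queue
```

The detector never decides on its own; it only builds a review queue with its reasons attached, which is where the human steps in.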

In our discussion, we used the example of fingerprint templates for consumer biometric authentication. Say we trained the AI to know that all fingerprints are made of curves forming concentric, irregular circles. The AI could find one image and isolate it because it has a big straight line across the template. Definitely not normal. It could indeed be a fake, generated template, BUT it could also belong to someone with a big scar from a prior injury. As a human, I may know that person or have that information, and I have the capacity to recognize that it is not an anomaly.

Imagine expanding and scaling that concept across various systems and being able to tag results as “AI generated content, Human checked”.
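The review step in the fingerprint example, and the “AI generated, Human checked” tag, can be sketched as a small record that keeps the AI’s reason for flagging an item next to the human’s verdict. This is a hypothetical data structure for illustration only; the class, field names, and verdict values are assumptions, not an existing standard.

```python
from dataclasses import dataclass
from typing import Optional

VERDICTS = ("anomaly", "legitimate")


@dataclass
class ReviewItem:
    """One item the AI isolated, plus the human decision about it."""
    item_id: str
    ai_reason: str                       # why the AI flagged the item
    human_verdict: Optional[str] = None  # set only by a human reviewer
    human_note: str = ""

    def record_verdict(self, verdict: str, note: str = "") -> None:
        if verdict not in VERDICTS:
            raise ValueError(f"verdict must be one of {VERDICTS}, got {verdict!r}")
        self.human_verdict = verdict
        self.human_note = note

    def provenance_tag(self) -> str:
        if self.human_verdict is None:
            return "AI generated, pending human check"
        return f"AI generated, Human checked ({self.human_verdict})"


# Example: the fingerprint template with a straight line across it.
item = ReviewItem("template-042", "straight line across the ridge pattern")
item.record_verdict("legitimate", "known scar from a prior injury")
print(item.provenance_tag())  # AI generated, Human checked (legitimate)
```

Keeping the human note alongside the verdict means the scar case can later be fed back as a known exception rather than flagged again.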

When I asked AI to help with AI vs. AI scenarios, it was quite interesting to see that it didn’t want to go into any doom scenarios, only very mild ones.

Here was the answer:

[AI Generated] There are many scenarios where AI systems can be pitted against each other. Here are a few hypothetical examples:

AI Chess Tournament: Two AI systems, each trained on different chess strategies, play against each other. This is not purely hypothetical; Google's DeepMind created an AI called AlphaZero that taught itself to play chess and then played against Stockfish, a top-rated chess engine, and won.

Generative Adversarial Networks (GANs): In machine learning, GANs are a class of AI algorithms used in unsupervised machine learning. They were invented by Ian Goodfellow and his colleagues in 2014. Two neural networks contest with each other in a game. Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics.

Cybersecurity AI vs. Hacking AI: An AI system designed for cybersecurity could be pitted against an AI system designed to find and exploit vulnerabilities in computer systems. The cybersecurity AI would need to detect and respond to the hacking AI's attempts to breach the system.

AI Debate: Two AI systems could be programmed to take opposing sides in a debate, each tasked with coming up with the most persuasive arguments and counterarguments. This concept has been explored by IBM with Project Debater.

Autonomous Vehicle Competition: Autonomous vehicles, each powered by its own AI system, could compete in a race. Each AI would need to make real-time decisions about speed, direction, and obstacle avoidance to outperform the other.

AI Trading Competition: AI systems designed for stock trading could compete against each other to see which can generate the highest returns over a given period. Each AI would make buy and sell decisions based on its analysis of market data.

Remember, while these competitions can be interesting and informative, they also raise important ethical and safety considerations, especially when AI systems are tasked with real-world decisions that could impact human lives or personal data.

[End of AI content]

The 2 examples I really like are:

#3, Cybersecurity AI vs. Hacking AI, as it relates to the network/infrastructure protection against attacks and exploit injection mentioned earlier.

#6, AI Trading Competition, as it could be turned into a way to validate whether transactions are legitimate and to time-stamp them. No more insider trading or high-frequency trading without tracing it back to its sources. This could help with more stable, clean, and fair markets, and take the edge away from some hedge funds. Not a popular idea for sure, but a good intellectual exercise.
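One way to make trades traceable and time-stamped, as imagined above, is a tamper-evident log in which each entry carries a timestamp and the hash of the previous entry (hash chaining, the idea behind blockchain ledgers). This is a minimal sketch of that swapped-in technique, not the AI trading system itself: the entry fields are hypothetical, and a real deployment would also need trusted timestamps and signatures. Any AI-based legitimacy check would sit on top of such a log.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # Hash a canonical JSON encoding so the same entry always hashes the same way.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain, transaction, timestamp=None):
    """Append a time-stamped transaction linked to the previous entry's hash."""
    body = {
        "timestamp": time.time() if timestamp is None else timestamp,
        "transaction": transaction,
        "prev_hash": chain[-1]["hash"] if chain else GENESIS,
    }
    entry = dict(body, hash=_digest(body))
    chain.append(entry)
    return entry


def verify_chain(chain):
    """Return False if any entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in chain:
        body = {k: entry[k] for k in ("timestamp", "transaction", "prev_hash")}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Because every hash depends on the one before it, quietly editing an old trade breaks verification for the entire chain.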

But if hallucinations can be used as a training tool to fight other hallucinations and anomalies, is there a way to mitigate AI hallucinations, or eliminate them completely, rendering my degenerative AI concept obsolete? Well, the idea is to generate better hallucinations: ones that are guided and trained, like an AI dream controlled by humans. So how do we get there?

AI hallucination, sometimes described as over-interpretation, is a phenomenon where an AI system generates or perceives features in data that aren't actually present. This can happen when an AI model is overfitted or not properly trained. Here are some strategies to mitigate AI hallucination:

Robust Training Data: Ensure that your training data is diverse, clean, and representative of the problem space. The more varied and comprehensive your data, the less likely the AI is to hallucinate features that aren't there.

Regularization: Techniques like L1 and L2 regularization can help prevent overfitting by adding a penalty on the size of the model's weights to the loss function, discouraging overly complex models.

Cross-Validation: Trust but verify. Use cross-validation to estimate how well your model will generalize to unseen data. This can help detect overfitting.

Ensemble Methods: Using multiple models and aggregating their predictions can help mitigate the risk of hallucination by any single model. Breaking down LLMs into smaller specialized models would be a start.

Noise Injection: AI dreaming under human control. Adding noise to the input data or to the model's parameters during training can improve the model's robustness and prevent overfitting.

Early Stopping: Stop training when validation performance stops improving, even if training performance continues to improve. This can prevent overfitting, which can lead to hallucination. Then revise the approach, or go back to cross-validation (#3).

Adversarial Training: This is the closest practice to my AI vs. AI thinking. It involves training the model with adversarial examples, which are inputs designed to fool the model. This can make the model more robust and less likely to hallucinate. However, strong ethical safeguards need to be put in place: AI vs. AI training could turn into an AI vs. controller conflict, and in that case, the controllers are the humans. You want the model to become harder to fool, but you don’t want to be fooled by the model.

Model Interpretability: Use interpretability techniques to understand what features the model is using to make predictions. If the model is focusing on irrelevant features, this could be a sign of hallucination. Algorithmic design is key here.

Human Oversight: Regularly review the AI's outputs and provide feedback. This can help catch hallucinations and guide the model towards more accurate interpretations.
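Several of these strategies can be combined in a single training loop. The sketch below is a minimal Python illustration, assuming a toy 1-D logistic-regression classifier and hypothetical data and hyperparameters. It adds an L2 penalty to the weight update (regularization), perturbs each training input with Gaussian noise (noise injection), and keeps the best parameters seen on a held-out validation set, stopping once validation loss stops improving (early stopping).

```python
import math
import random

# Hypothetical toy data: (feature, label) pairs for a 1-D binary classifier.
TRAIN = [(-2.5, 0), (-2.0, 0), (-1.5, 0), (-1.0, 0),
         (1.0, 1), (1.5, 1), (2.0, 1), (2.5, 1)]
VAL = [(-1.8, 0), (-1.2, 0), (1.2, 1), (1.8, 1)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_loss(w, b, data):
    # Mean binary cross-entropy; eps guards against log(0).
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


class EarlyStopper:
    """Signals a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience):
        self.patience = patience
        self.best = math.inf
        self.bad_epochs = 0

    def update(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
            return False
        self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def train(train_data, val_data, lr=0.1, l2=0.01, noise_std=0.3,
          patience=5, max_epochs=200, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    best_params = (w, b)
    stopper = EarlyStopper(patience)
    epochs_run = 0
    for _ in range(max_epochs):
        epochs_run += 1
        for x, y in train_data:
            # Noise injection: perturb each input with Gaussian noise.
            x_noisy = x + rng.gauss(0.0, noise_std)
            p = sigmoid(w * x_noisy + b)
            # Log-loss gradient plus the L2 term d/dw(l2 * w**2) = 2 * l2 * w.
            w -= lr * ((p - y) * x_noisy + 2 * l2 * w)
            b -= lr * (p - y)  # the bias is conventionally not regularized
        # Early stopping: judge progress on clean, held-out validation data.
        val_loss = log_loss(w, b, val_data)
        if val_loss < stopper.best:
            best_params = (w, b)
        if stopper.update(val_loss):
            break
    return best_params, epochs_run
```

Validation is measured on clean data without the penalty term, so the stopping decision reflects how well the model generalizes rather than how well it fits its own noisy training inputs.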

The best approach often involves a combination of these strategies, tailored to the specific AI task and data. Getting models tailored to the task (smaller, dedicated models for a specific area, e.g. SynthAI) will also help achieve better anomaly detection rates.
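Here is a minimal sketch of that idea, where hypothetical rule-based detectors stand in for small trained models. Each domain gets its own few specialized detectors, and an item is flagged only when a majority of them agree (a simple ensemble). The domains, feature names, and thresholds are made up for illustration.

```python
from collections import Counter
from typing import Callable, Dict, List

# A detector returns True if it considers the item anomalous.
Detector = Callable[[dict], bool]

# Hypothetical small, specialized detectors, each covering one narrow domain.
SPECIALISTS: Dict[str, List[Detector]] = {
    "fingerprint": [
        lambda t: t.get("straight_lines", 0) > 0,
        lambda t: t.get("ridge_count", 0) < 10,
        lambda t: t.get("quality", 1.0) < 0.4,
    ],
    "transaction": [
        lambda t: t.get("amount", 0) > 10_000,
        lambda t: t.get("hour", 12) < 5,
        lambda t: t.get("new_counterparty", False),
    ],
}


def majority_vote(detectors: List[Detector], item: dict) -> bool:
    """Flag the item only if more than half of the detectors flag it."""
    votes = Counter(detector(item) for detector in detectors)
    return votes[True] > len(detectors) / 2


def detect(item: dict) -> bool:
    """Route the item to the specialists for its domain, then take a vote."""
    detectors = SPECIALISTS.get(item["domain"])
    if detectors is None:
        raise KeyError(f"no specialized detectors for domain {item['domain']!r}")
    return majority_vote(detectors, item)
```

With this voting rule, a template with a single straight line but otherwise normal ridges is outvoted and not flagged, while a template failing several checks is; a human would still review whatever gets through.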

The interesting part is that, even though I added AI-generated content to the recommendations above, Human Oversight was not my idea. It was AI that suggested it. I found that quite interesting, and it aligns well with my “AI Generated, Human checked” concept.

Now you understand the concept of degenerative AI.

It is degenerative because it is not really generating anything: it learns from its mistakes to correct others, thus making fewer and fewer of them. So, in the context of AI, it is not a system that degrades in performance over time, as stated at the beginning, but one that contains less and less garbage data, resulting in cleaner, smaller models that can generate accurate output. Degenerative as in becoming smaller and smaller, and finding the right data in dedicated models. Like “Honey, I shrunk AI”.

It reminds me of the anecdote I mentioned in my book “The Delivery Man”: in February 2007, phone manufacturers declared that users would never use a touchscreen on a mobile device. On June 29, 2007, the iPhone that Steve Jobs had unveiled on stage went on sale. What did they miss? Well, their data source (the people they surveyed for their opinions) was selected by their trade organizations. They found people who were in the same industry or ecosystem, like friends and family. It was prone to generating a hallucination, and they didn’t realize it. Call it echo chamber, user error, or hallucination: there were no corrective actions in place to polish the end result. Their model was small but full of bias. This is the other aspect of degenerative AI: removing these biases by making sure AI can learn from itself what anomalies are.

But that’s just my answer to what interests me in AI. What’s yours?